Year: 2014

This post was prompted by Michael Covel’s interview in Traders’ Magazine in which he claims that trend followers don’t try to make predictions. This idea that trend followers do not forecast returns is widely and frequently repeated. It is also complete nonsense.

Every trading strategy makes forecasts. Whether these forecasts are explicit or hidden behind entry/exit rules is irrelevant. All the standard trend following systems can trivially be converted into a forecasting model that predicts returns, because they are fundamentally equivalent.

The specific formulation of the trend following system doesn’t matter, so I’ll keep it simple. A typical trend following indicator is the Donchian channel, which is simply the n-bar highest high and lowest low. Consider a system that goes long when price closes above the 100-day Donchian channel and exits when price closes below the 50-day Donchian channel.

This is the equity curve of the system applied to crude oil futures:

This system can trivially be converted to a forecasting model of the form

y = α + βx + ε

where the dependent variable y is returns, and x is a dummy variable that takes the value 1 if we are in a trend and 0 if we are not. How do we define “in a trend”? Using the exact same conditions we use for entries and exits, of course.

We estimate the parameters and find that α ≃ 0, and β = 0.099% (with p-value 0.013). So, using this trend following forecasting model, the expected return when in a trend is approximately 10bp per day, and the expected return when not in a trend is zero. Look ma, I’m forecasting!

Even without explicitly modeling this relationship, trend followers implicitly predict that trends persist beyond their entry point; otherwise trend following wouldn’t work. The model can easily be extended with more complicated entry/exit rules, short selling, the effects of volatility-based position sizing, etc.
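To make the equivalence concrete, here is a minimal sketch of the dummy-variable regression in Python. It is not the code behind the numbers above: the prices are a synthetic random walk rather than crude oil futures, the channel is built from closes only (an assumption, since the real indicator uses highs and lows), and the names `trend_dummy` and `ols` are made up for illustration.

```python
import random


def trend_dummy(closes, entry_n=100, exit_n=50):
    """x[t] = 1 if the Donchian system is long after bar t's close, else 0.

    Assumption: the channel is built from the prior n closes; the real
    indicator uses highest high / lowest low, which needs full bar data.
    """
    x, in_trend = [], False
    for t, c in enumerate(closes):
        if t >= entry_n:
            if not in_trend and c > max(closes[t - entry_n:t]):
                in_trend = True   # close above the 100-day channel: enter
            elif in_trend and c < min(closes[t - exit_n:t]):
                in_trend = False  # close below the 50-day channel: exit
        x.append(1 if in_trend else 0)
    return x


def ols(x, y):
    """Fit y = alpha + beta * x by ordinary least squares."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    beta = sxy / sxx
    return my - beta * mx, beta


# Synthetic prices, NOT crude oil data: a random walk with a small drift
random.seed(0)
closes = [100.0]
for _ in range(3000):
    closes.append(closes[-1] * (1 + random.gauss(0.0002, 0.01)))

x = trend_dummy(closes)
# Regress the NEXT day's return on today's trend state
returns = [closes[t + 1] / closes[t] - 1 for t in range(len(closes) - 1)]
alpha, beta = ols(x[:-1], returns)
print(f"alpha={alpha:.5f} beta={beta:.5f}")
```

With a single dummy regressor, α is simply the average return when out of a trend and α + β is the average return when in one, so the regression and the entry/exit rules carry the same information.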

Read more Trend Followers Make Forecasts Just Like Everyone Else

Presenting the similarity between multiple time series in an intuitive manner is not an easy problem. The standard solution is a correlation matrix, but it’s a problematic approach. While it makes it easy to check the correlation between any two series, and (with the help of conditional formatting) the relation between one series and all the rest, it’s hard to extract an intuitive understanding of how all the series are related to each other. And if you want to add a time dimension to see how correlations have changed, things become even more troublesome.

The solution is multidimensional scaling (the “classical” version of which is known as Principal Coordinates Analysis). It is a way of taking a distance matrix and placing each object in N dimensions such that the distances between them are preserved as well as possible. N = 2 is the obvious use case, as it makes for the simplest visualizations. MDS works similarly to PCA, but uses the dissimilarity matrix as input instead of the series. Here’s a good take on the math behind it.

It should be noted that MDS doesn’t care about how you choose to measure the distance between the time series. While I used correlations in this example, you could just as easily use a technique like dynamic time warping.
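For readers who want to see the mechanics, below is a minimal sketch of classical MDS in Python with NumPy. It is not the C# implementation mentioned later in the post. The mapping from correlation to distance, sqrt(2(1 − ρ)), is one common choice and an assumption here, and the correlation values are made up.

```python
import numpy as np


def classical_mds(dist, n_dims=2):
    """Classical MDS (principal coordinates analysis) of a distance matrix."""
    d = np.asarray(dist, dtype=float)
    n = d.shape[0]
    # Double-centre the squared distances to get a Gram (inner product) matrix
    centring = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centring @ (d ** 2) @ centring
    # eigh returns eigenvalues in ascending order; keep the largest n_dims
    vals, vecs = np.linalg.eigh(gram)
    top = np.argsort(vals)[::-1][:n_dims]
    # Negative eigenvalues (non-Euclidean input) are clipped to zero
    return vecs[:, top] * np.sqrt(np.clip(vals[top], 0.0, None))


def correlation_distance(corr):
    """One common (assumed) mapping from correlation to distance."""
    return np.sqrt(2.0 * (1.0 - np.asarray(corr, dtype=float)))


# Made-up correlations between three hypothetical series
corr = [[1.0, 0.8, -0.2],
        [0.8, 1.0, 0.0],
        [-0.2, 0.0, 1.0]]
coords = classical_mds(correlation_distance(corr))
print(coords)  # one (x, y) row per series, ready for a scatter plot
```

The output is only defined up to rotation and reflection, so for an animation over time the frames usually need to be aligned to each other before plotting.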

Below is an example with SPY, TLT, GLD, SLV, IWM, VNQ, VGK, EEM, EMB, using 252 day correlations as the distance measure, calculated every Monday. The motion chart lets us see not only the distances between each ETF at one point in time, but also how they have evolved.

Some interesting stuff to note: watch how REITs (VNQ) become more closely correlated with equities during the financial crisis, how distant emerging market debt (EMB) is from everything else, and the changing relationship between silver (SLV) and gold (GLD).

Here’s the same thing with a bunch of sector ETFs:

To do MDS at home: in R and MATLAB you can use cmdscale(). I have posted a C# implementation here.

Read more Visualizing the Similarity Between Multiple Time Series

When I was first starting out a couple of years ago I didn’t really track my performance beyond the simple report that IB generates. Eventually I moved on to Excel sheets which grew to a ridiculous and unmanageable size. I took a look at tradingdiary pro, but it wasn’t flexible or deep enough for my requirements.

So I wrote my own (I blogged about it here): on the one hand I focused on flexibility in terms of how the data can be divided up (with a very versatile strategy/trade/tag system), and on the other hand on producing meaningful and relevant information that can be applied to improve your trading. Now I have ported it to WPF and removed a bunch of proprietary components so it can be open sourced. So…

I’m very happy to announce that the first version (0.1) of the QUSMA Performance Analytics Suite (QPAS) is now available. For an overview of its main performance analysis capabilities see the performance report documentation.

The port is still very fresh so I’d really appreciate your feedback. For bug reports, feature requests, etc. you can use the GitHub issue tracker, the Google group, or the comments on this post.

While the IB flex statements provide enough data for most functionality, QPAS needs additional data for things like charting, execution analysis, and benchmarking. By default it uses QDMS, but you can use your own data source by implementing the IExternalDataSource interface.

Currently the only supported broker is Interactive Brokers, but for those of you who do not use them, the statement importing system is flexible: see the Implementing a Statement Parser page in the documentation for more.

I should note that in general I designed the application for myself and my own style of trading, which means that some features you might expect are missing: no sector/factor attribution for stock pickers, no attribution stats for credit pickers, daily-frequency calculation of things like MAE/MFE (so any intraday trades will show zero MAE/MFE), and no options-specific analytics. All these things would be reasonably easy to add if you feel like it (and know a bit of C#), though.

Features:

- Highly detailed performance statistics

- Ex-post risk analytics

- Benchmarking

- Execution analytics

- Trade journal: annotate trades with rich text and images

Requirements:

Screenshots:

- Performance overview

- Per-trade stats

- Monte Carlo simulation, with equity curve confidence intervals and max drawdown distribution

- Benchmarking

- Execution analysis

- Expected shortfall

- Trade annotations with rich text and images

- Trade overview

- Maximum adverse excursion (MAE)

- Relative capital usage by strategy

- Performance by instrument

- Average cumulative returns per day

Read more Announcing QPAS: Open Source Performance, Risk, and Execution Analytics

And now for something completely different. A bit of macro and a bit of factor relative performance: what happens when yield spreads and small caps diverge from the S&P 500?

High Yield Spreads

First, let’s play with some macro-style data. Below you’ll find the BofA Merrill Lynch US High Yield Master II Option-Adjusted Spread (a high yield bond spread index) plotted against SPY.

Obviously the two are inversely correlated as spreads tend to widen in bear markets. Very low values are a sign of overheating. We’re still about 130 bp away from the pre-crisis lows, so in that regard the current situation seems pretty good. There’s a bit more to it than that, though.

First, divergences. When stocks keep making new highs and spreads start rising, it’s generally bad news. Some of the most interesting areas to look at are July ’98, April ’00, July ’07, and July ’11.

Also interesting is the counter-case of May ’10 which featured no such divergence: the Flash Crash may have been the driver of that dip, and that’s obviously unconnected to the macro situation in general and high yield spreads in particular.

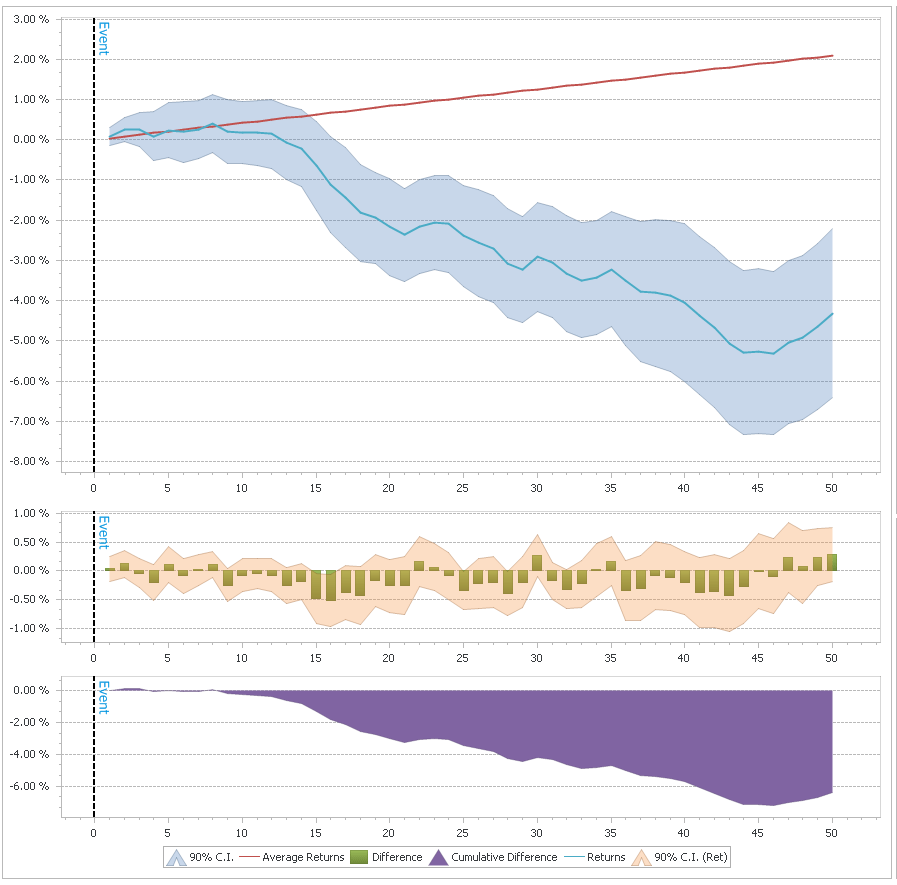

So, let’s try to quantify these divergences and see if we can get anything useful out of them. On the chart above I have marked the times when both the spread and SPY were in the top 10% of their 100-day range. As you can see, these were generally times close to the top, though there were multiple “false signals”, for example in November ’05 and September ’06. Here are the cumulative returns for the next 50 days after such a signal:

Much of this effect depends on overlapping periods, though, so it’s not as good as it looks. Still, I think it’s definitely something worth keeping an eye on. Of course right now we’re pretty far from that sort of signal triggering as spreads have been dropping consistently.
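The signal itself is easy to express in code. The sketch below is one possible reading of “top 10% of their 100-day range”: the current value sits at or above 90% of the way between the trailing 100-bar low and high. That interpretation, the function names, and the toy inputs are all assumptions.

```python
def range_position(series, t, window=100):
    """Position of series[t] within its trailing `window`-bar range (0 to 1)."""
    chunk = series[t - window + 1:t + 1]
    lo, hi = min(chunk), max(chunk)
    return 0.5 if hi == lo else (series[t] - lo) / (hi - lo)


def divergence_signals(spy, spread, window=100, threshold=0.9):
    """Bars where both series sit in the top 10% of their trailing range."""
    return [t for t in range(window - 1, min(len(spy), len(spread)))
            if range_position(spy, t, window) >= threshold
            and range_position(spread, t, window) >= threshold]


# Toy inputs, not real data: stocks and spreads grinding higher together
spy = [100 + 0.1 * i for i in range(150)]
spread = [4 + 0.01 * i for i in range(150)]
signals = divergence_signals(spy, spread)
print(signals[:3])
```

Because consecutive bars tend to trigger together, forward returns measured from every signal bar overlap heavily, which is the caveat raised above.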

Size: When Small Equities Diverge

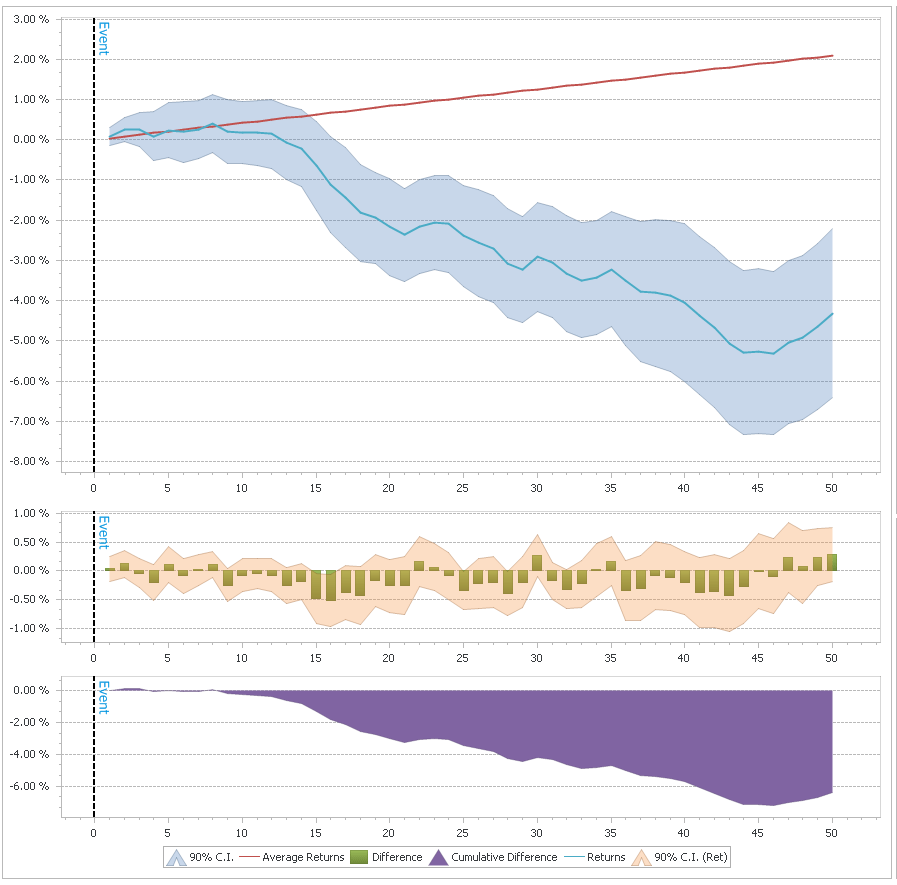

Lately we’ve been seeing the Russell 2000 (and to some extent the NASDAQ) take a dip while the S&P 500 has been going sideways with only very small drawdowns. There are several ways to formulate this situation quantitatively. I simply went with the difference between the 20-day return of SPY and IWM. The results are pretty clear: large caps outperforming is a (slightly) bullish signal, for both large and small equities.

When the ROC(20) difference is greater than 3%, SPY has above average returns for the following 2-3 weeks, to the tune of 10bp per day (IWM also does well, returning approximately 16bp per day over the next 10 days). The reverse is also useful to look at: small cap outperformance is bearish. When the ROC(20) difference goes below -3%, the next 10 days SPY returns an average -5bp per day. Obviously not enough on its own to go short, but it could definitely be useful in combination with other models.
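As a rough illustration of how such a study can be set up, here is a minimal Python sketch. The function names and toy price series are hypothetical, and averaging over every signal bar (so forward windows overlap) is an assumption about the method, not a description of how the figures above were computed.

```python
def roc(prices, t, n=20):
    """n-day rate of change ending at bar t."""
    return prices[t] / prices[t - n] - 1.0


def size_divergence_bars(spy, iwm, n=20, threshold=0.03):
    """Bars where ROC(n) of SPY exceeds ROC(n) of IWM by more than threshold."""
    return [t for t in range(n, len(spy))
            if roc(spy, t, n) - roc(iwm, t, n) > threshold]


def mean_forward_daily_return(prices, signal_bars, horizon=10):
    """Average daily return over the `horizon` bars after each signal bar."""
    daily = [prices[t + h + 1] / prices[t + h] - 1.0
             for t in signal_bars if t + horizon < len(prices)
             for h in range(horizon)]
    return sum(daily) / len(daily) if daily else float("nan")


# Toy series, not real data: SPY climbs 1% a day while IWM is flat
spy = [100 * 1.01 ** i for i in range(60)]
iwm = [100.0] * 60
bars = size_divergence_bars(spy, iwm)
print(len(bars), mean_forward_daily_return(spy, bars))
```

Flipping the sign of the comparison gives the small cap outperformance case.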

Another interesting divergence to look at is breadth. For the last couple of weeks, while SPY is hovering around all-time highs, many of the stocks in the index are below their 50-day SMAs. I’ll leave the breadth divergence research as an exercise to the reader, but will note that contrary to the size divergence, it tends to be bearish.

In other news I’ve started posting binaries of QDMS (the QUSMA Data Management System) as it’s getting more mature. You can find the link on the project’s page. It’ll prompt you for an update when a new version comes out.

Read more Divergences: Yield Spreads and Size

Before you read this post, read the (Wagner award winning) Know Your System! – Turning Data Mining from Bias to Benefit through System Parameter Permutation by Dave Walton.

The concept is essentially to use all the results from a brute force optimization and pick the median as the best estimate of out of sample performance. The first step is:

Parameter scan ranges for the system concept are determined by the system developer.

And herein lies the main problem. The scan range will determine the median. If the range is too wide, the estimate will be too low and based on data that is essentially irrelevant because the trader would never actually pick that combination of parameters. If the range is too narrow, the entire exercise is pointless. But the author provides no way of picking the optimal range a priori (because no such method exists). And of course, as is mentioned in the paper, repeated applications of SPP with different ranges are problematic.
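A toy example makes the dependence on the scan range obvious. The sketch below uses a made-up performance curve that peaks at one parameter value and decays on either side; `spp_estimate` is a hypothetical helper that just takes the median over the scanned range, which is the core of the SPP point estimate as described above.

```python
from statistics import median


def spp_estimate(performance_by_param, lo, hi):
    """SPP-style point estimate: the median result across the scanned range."""
    scanned = [perf for p, perf in performance_by_param.items() if lo <= p <= hi]
    return median(scanned)


# Hypothetical backtest results: performance peaks at param = 10 and decays
perf = {p: 10.0 - abs(p - 10) for p in range(1, 41)}

narrow = spp_estimate(perf, 8, 12)   # range hugging the optimum
wide = spp_estimate(perf, 1, 40)     # range including values nobody would trade
print(narrow, wide)  # 9.0 -0.5
```

Same system, same data, and the estimate goes from comfortably positive to negative purely as a function of the range the developer decided to scan.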

To illustrate, let’s use my UDIDSRI post from October 2012. The in sample period will be the time before that post and the “out of sample” period will be the time after it; the instrument is QQQ, and the strategy is simply to go long at the close when UDIDSRI is below X (the value on the x-axis below).

As you can see, the relationship between next-day returns and UDIDSRI is quite stable. The out of sample returns are higher across most of the range but that’s just an artifact of the giant bull market in the out of sample period. What would have been the optimal SPP range in October 2012? What is the optimal SPP range in hindsight? Would the result have been useful? Ask yourself these questions for each chart below.

Let’s have a look at SPY:

Whoa. The optimum has moved to < 0.05. Given a very wide range, SPP would have made a correct prediction in this case. But is this a permanent shift or just a result of a small sample size? Let’s see the results for 30 equity ETFs:

Well, that’s that. What about SPP in comparison to other methods?

The use of all available market data enables the best approximation of the long-run so the more market data available, the more accurate the estimate.

This is not the case. The author’s criticism of CV is that it makes “Inefficient use of market data”, but that’s a bad way of looking at things. CV uses all the data (just not in “one go”) and provides us with actual estimates of out of sample performance, whereas SPP just makes an “educated guess”. A guess that is 100% dependent on an arbitrarily chosen parameter range. Imagine, for example, two systems: one has stable optimal parameters over time, while the other one does not. The implications in terms of out of sample performance are obvious. CV will accurately show the difference between the two, while SPP may not. Depending on the range chosen, SPP might severely under-represent the true performance of the stable system. There’s a lot of talk about “regression to the mean”, but what mean is that?

SPP minimizes standard error of the mean (SEM) by using all available market data in the historical simulation.

This is true, but again what mean? The real issue isn’t the error of the estimate, it’s whether you’re estimating the right thing in the first place. CV’s data splitting isn’t an arbitrary mistake done to increase the error! There’s a point, and that is measuring actual out of sample performance given parameters that would actually have been chosen.

tl;dr: for some systems SPP is either pointless or just wrong. For some other classes of systems where out of sample performance can be expected to vary across a range of parameters, SPP will probably produce reasonable results. Even in the latter case, I think you’re better off sticking with CV.

Read more A Few Notes on System Parameter Permutation

Averaging financial time series in a way that preserves important features is an interesting problem, and central in the quest to create good “alpha curves”. A standard average over several time series will usually smooth away the most salient aspects: the magnitude of the extremes and their timing. Naturally, these points are the most important for traders as they give guidance about when and where to trade.

DTW Barycenter Averaging (or DBA) is an iterative algorithm that uses dynamic time warping to align the series to be averaged with an evolving average. It was introduced in A global averaging method for dynamic time warping, with applications to clustering by Petitjean et al. As you’ll see below, the DBA method has several advantages that are quite important when it comes to combining financial time series. Note that it can also be used to cluster time series using k-means. Roughly, the algorithm works as follows:

- The n series to be averaged are labeled S1…Sn and have length T.

- Begin with an initial average series A.

- While average has not converged:

    - For each series S, perform DTW against A, and save the path.

    - Use the paths, and construct a new average A by giving each point a new value: the average of every point from S connected to it in the DTW path.

You can find detailed step-by-step instructions in the paper linked above.
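The steps above can be sketched in a few lines of Python. This is a simplified illustration, not the paper’s reference implementation or the C# code linked later: it assumes a squared-difference local cost, unconstrained warping, and a simple “largest change below tolerance” convergence test.

```python
def dtw_path(a, b):
    """Return the optimal DTW alignment between a and b as (i, j) pairs."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = (a[i - 1] - b[j - 1]) ** 2  # assumed local cost
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    # Backtrack from the end to recover the path
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        steps = [(cost[i - 1][j - 1], i - 1, j - 1),
                 (cost[i - 1][j], i - 1, j),
                 (cost[i][j - 1], i, j - 1)]
        _, i, j = min(steps)
    return path[::-1]


def dba(series, initial, max_iter=50, tol=1e-9):
    """Iteratively refine `initial` into a DTW barycenter of `series`."""
    avg = list(initial)
    for _ in range(max_iter):
        # For each point of the average, collect every input point aligned to it
        buckets = [[] for _ in avg]
        for s in series:
            for i, j in dtw_path(avg, s):
                buckets[i].append(s[j])
        new_avg = [sum(b) / len(b) for b in buckets]
        if max(abs(x - y) for x, y in zip(avg, new_avg)) < tol:
            return new_avg
        avg = new_avg
    return avg


# Toy inputs: the same bump, shifted along the x axis
series = [[0, 0, 1, 3, 1, 0, 0, 0],
          [0, 0, 0, 1, 3, 1, 0, 0],
          [0, 0, 0, 0, 1, 3, 1, 0]]
simple = [sum(col) / len(col) for col in zip(*series)]
result = dba(series, initial=series[1])
print(max(simple), max(result))
```

In this toy case the pointwise average flattens the peak, while DBA started from the middle series keeps its full height because the warping absorbs the x-axis offsets.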

A good initialization process is extremely important because while the DBA process itself is deterministic, the final result depends heavily on the initial average sequence. For our purposes, we have 3 distinct goals:

- To preserve the shape of the inputs.

- To preserve the magnitude of the extremes on the y axis.

- To preserve the timing of those extremes on the x axis.

Let’s take a look at how DBA compares to normal averaging, and how the initial average sequence affects the end result. For testing purposes I started out with this series:

Then created a bunch of copies by adding some random variation and an x-axis offset:

To start out, let’s see what a simple average does. Note the shape, the distance between peak and valley, and the magnitude of the minimum and maximum values: all far from the original series.

The simple average fails at all 3 goals laid out above.

Now, on to DBA. What are our initialization options? My first instinct was to try to start the process using the simple average, above. While this achieves goal #2, the overall shape is obviously wrong.

Petitjean et al. recommend picking one of the input series at random. On the one hand this preserves the shape well, but the timing of the extremes depends on which series happened to be chosen. Additionally, a deterministic process is preferable for obvious reasons.

My solution was to use an input series for initialization, but to choose it through a deterministic process. I first de-trend every time series, then record the x-axis value of the y-axis maximum and minimum values for each series. The series that is closest to the median of those values is chosen. This allows us to preserve the shape, the y-axis extreme magnitudes, and get a good idea of the typical x-axis position of those extremes:

You can find C# code to do DBA here.
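For illustration, the deterministic initialization described above might look something like the following in Python. Several details are guesses rather than the author’s method: the detrending removes the straight line joining the first and last points, and “closest to the median” is measured as the sum of absolute differences in the timing of the maximum and minimum.

```python
from statistics import median


def detrend(series):
    """Subtract the line joining the first and last points (assumed method)."""
    n = len(series)
    slope = (series[-1] - series[0]) / (n - 1)
    return [v - (series[0] + slope * i) for i, v in enumerate(series)]


def pick_initial_series(series_list):
    """Index of the series whose extremes are timed closest to the medians."""
    timings = []
    for s in series_list:
        d = detrend(s)
        timings.append((d.index(max(d)), d.index(min(d))))
    med_max = median(t[0] for t in timings)
    med_min = median(t[1] for t in timings)
    # Assumed closeness measure: sum of absolute timing differences
    scores = [abs(tmax - med_max) + abs(tmin - med_min) for tmax, tmin in timings]
    return scores.index(min(scores))


# Toy inputs: a peak followed by a trough, at three different offsets
a = [0, 0, 5, 0, 0, 0, -5, 0, 0, 0, 0, 0]
b = [0, 0, 0, 5, 0, 0, 0, -5, 0, 0, 0, 0]
c = [0, 0, 0, 0, 0, 5, 0, 0, 0, 0, -5, 0]
print(pick_initial_series([a, b, c]))  # 1
```

The chosen series would then be passed to the DBA routine as its starting average.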

Read more Reverse Engineering DynamicHedge’s “Alpha Curves”, Part 2.5 of 3: DTW Barycenter Averaging

In the first part of the series we covered dynamic time warping. Here we look at clustering. K-means clustering is probably the most popular method, mainly due to its simplicity and intuitive algorithm. However it has some drawbacks that make it a bad choice when it comes to clustering time series. Instead, we’ll use K-medoids clustering.

The main conceptual difference between K-means and K-medoids is the distance used in the clustering algorithm. K-means uses the distance from a centroid (an average of the points in the cluster), while K-medoids uses distance from a medoid, which is simply a point selected from the data. The algorithms used for arriving at the final clusters are quite different.

K-medoid clustering depends on distances from k (in this case 2) points drawn from the data.

K-means distances are taken from a centroid which is created by averaging the points in that cluster.

How does K-medoids compare to K-means? It has several features that make it superior for most problems in finance.

- K-medoids is not sensitive to outliers that might “pull” the centroid to a disadvantageous position.

- K-medoids can handle arbitrary distance functions. Unlike K-means, there is no need for a mean to be defined.

- Unlike K-means, K-medoids has no problems with differently-sized clusters.

- K-medoids is also potentially less computationally intensive when the distance function is difficult to solve, as distances only need to be computed once for each pair of points.

Note that there’s no guarantee on the size of each cluster. If you want to, it’s trivial to add some sort of penalty function to force similarly-sized clusters.

The algorithm is simple:

- Choose k points from the sample to be the initial medoids (see below for specific methods).

- Give cluster labels to each point in the sample based on the closest medoid.

- Replace one of the medoids with some other point in the sample. If the total cost (sum of distances from the closest medoid) decreases, keep this new configuration.

- Repeat until there is no further change in medoids.

Initialization, i.e. picking the initial clusters before the algorithm is run, is an important issue. The final result is sensitive to the initial set-up, as the clustering algorithm can get caught in local minima. It is possible to simply assign labels at random and repeat the algorithm multiple times. I prefer a deterministic initialization method: I use a procedure based on Park et al., the code for which you can find further down. The gist of it is that it selects the first medoid to be the point with the smallest average distance to all other points, then selects the remaining medoids based on maximum distance from the previous medoids. It works best when k is set to (or at least close to) the number of clusters in the data.
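Here is a minimal Python sketch of the algorithm and the initialization just described, working directly on a precomputed distance matrix. It is an illustration of the steps in this post, not the C# class linked below and not a faithful reproduction of Park et al.; in particular, “maximum distance from the previous medoids” is interpreted as maximizing the distance to the nearest medoid chosen so far.

```python
def initial_medoids(dist, k):
    """Deterministic start: most central point, then points far from it."""
    n = len(dist)
    first = min(range(n), key=lambda i: sum(dist[i]))
    medoids = [first]
    while len(medoids) < k:
        # Assumed reading: maximise distance to the nearest existing medoid
        nxt = max((i for i in range(n) if i not in medoids),
                  key=lambda i: min(dist[i][m] for m in medoids))
        medoids.append(nxt)
    return medoids


def total_cost(dist, medoids):
    """Sum over all points of the distance to the closest medoid."""
    return sum(min(row[m] for m in medoids) for row in dist)


def k_medoids(dist, k):
    """Swap medoids with non-medoids for as long as the total cost falls."""
    medoids = initial_medoids(dist, k)
    cost = total_cost(dist, medoids)
    improved = True
    while improved:
        improved = False
        for pos in range(k):
            for candidate in range(len(dist)):
                if candidate in medoids:
                    continue
                trial = medoids[:pos] + [candidate] + medoids[pos + 1:]
                trial_cost = total_cost(dist, trial)
                if trial_cost < cost:
                    medoids, cost, improved = trial, trial_cost, True
    labels = [min(range(k), key=lambda c: row[medoids[c]]) for row in dist]
    return medoids, labels


# Toy data: two obvious groups on a line, absolute difference as distance
points = [0, 1, 2, 10, 11, 12]
dist = [[abs(p - q) for q in points] for p in points]
medoids, labels = k_medoids(dist, k=2)
print(medoids, labels)  # [1, 4] [0, 0, 0, 1, 1, 1]
```

Since only the distance matrix is used, any distance function works, including DTW between time series.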

An example, using two distinct groups (and two outliers):

Initial medoids with k=2.

The first medoid is selected due to its closeness to the rest of the points in the lower left cluster, then the second one is selected to be furthest away from the first one, thus “setting up” the two obvious clusters in the data.

In terms of practical applications, clustering can be used to group candlesticks and create a transition matrix (also here), to group and identify trading algorithms, or for clustering returns series for forecasting (also here). I imagine there’s some use in finding groups of similar assets for statistical arbitrage, as well.

Something I haven’t seen done but I suspect has potential is to cluster trades based on return, length, adverse excursion, etc. Then look at the average state of the market (as measured by some indicators) in each cluster, the most common industries of the stocks in each cluster, or perhaps simply the cumulative returns series of each trade at a reasonably high frequency. Differences between the “good trades” cluster(s) and the “bad trades” cluster(s) could then be used to create filters. The reverse, clustering based on conditions and then looking at the average returns in each cluster, would achieve the same objective.

I wrote a simple K-medoids class in C#, which can handle arbitrary data types and distance functions. You can find it here. I believe there are packages for R and Python if that’s your thing.

Read more Reverse Engineering DynamicHedge’s “Alpha Curves”, Part 2 of 3: K-Medoids Clustering